Hi All,

This question is again related to some of the others I have posted. I have a combinational logic circuit where 9 signals enter, pass through some number of logic gates (5-6 on any given path), and 7 new signals are output. I am not currently using a clock; however, an enable signal is provided to the clock input of the first chips in this circuit to indicate when to sample the inputs.

I wish to avoid any glitches (static-0 or dynamic) in the output caused by race hazards through the various logic paths (a static-1 glitch is acceptable and will not harm the output of the circuit). The best way I could think of to do this is a final AND gate on each output, with the output signal and the enable signal as its inputs. This way, all outputs are held LOW until enough time has passed for glitches to settle.

To do this, I want to use the same enable signal that I used to trigger the input sampling, but I want to delay it. The delay must be such that the best-case (shortest) delay in the enable path is longer than the worst-case (longest) delay through any path in the logic gates. I saw some designs using a chain of buffers; however, I was wondering if there is a better way to handle this condition. Buffers in the logic family I am using seem to have very fast propagation delays (5-10 ns), so a large number of them would be needed to delay the enable signal sufficiently.
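To put a rough number on "a large number of them", here is a minimal Python sketch of the constraint above. The 5 ns and 10 ns buffer figures come from the range I quoted; the 10 ns worst-case delay per logic gate and the function name `min_buffers` are assumptions for illustration only, not datasheet values.

```python
import math


def min_buffers(worst_logic_ns, buf_min_ns, margin_ns=0):
    """Smallest buffer count n such that n * buf_min_ns > worst_logic_ns + margin_ns.

    The fastest possible enable path (every buffer at its minimum delay)
    must still be slower than the slowest path through the logic.
    """
    if buf_min_ns <= 0:
        raise ValueError("buffer minimum delay must be positive")
    return math.floor((worst_logic_ns + margin_ns) / buf_min_ns) + 1


# Assumed figures: 6 gates on the longest path, 10 ns worst case each (hypothetical).
worst_logic_ns = 6 * 10
buf_min_ns, buf_max_ns = 5, 10  # buffer delay range quoted in the question

n = min_buffers(worst_logic_ns, buf_min_ns)
print("buffers needed:", n)
print("enable delay range (ns):", n * buf_min_ns, "to", n * buf_max_ns)
```

Under these assumed numbers the chain needs 13 buffers, and the enable could arrive anywhere between 65 ns and 130 ns after the inputs are sampled, which is the part that makes me doubt the buffer-chain approach.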

Is this how people normally handle these conditions? If not, what is a good alternative idea?

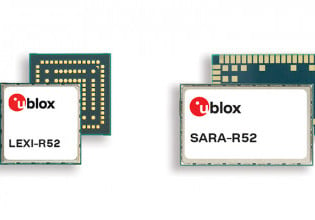

EDIT: Added picture. Latch on the left signifies the input logic sampling (in my case a CD74AC238 3-to-8 decoder). The ENA signal is HIGH when DAT is valid and static. The top path is a combinational logic circuit with some number of gates. The bottom path is my proposed delay with some number of buffers. The AND gate at the end holds the output LOW until the logic has settled on its final value and the ENA delay has expired.
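To make the intended behaviour of the final AND gate concrete, here is a small idealised sketch in Python. The waveform times, the 65 ns delayed-enable time, and the helper names `value_at` and `gated_waveform` are all hypothetical, and the model ignores the AND gate's own propagation delay.

```python
def value_at(transitions, t, initial=0):
    """Logic level at time t for a waveform given as sorted (time_ns, level) pairs."""
    level = initial
    for when, new_level in transitions:
        if when <= t:
            level = new_level
        else:
            break
    return level


def gated_waveform(raw_transitions, ena_delayed_rise_ns, t_end_ns, step_ns=1):
    """Sample (raw AND delayed enable) from 0 to t_end_ns in step_ns increments."""
    samples = []
    for t in range(0, t_end_ns + 1, step_ns):
        raw = value_at(raw_transitions, t)
        ena = 1 if t >= ena_delayed_rise_ns else 0
        samples.append((t, raw & ena))
    return samples


# Hypothetical raw logic output: glitches HIGH at 12 ns, back LOW at 18 ns,
# then settles HIGH at 40 ns.
raw = [(12, 1), (18, 0), (40, 1)]
gated = gated_waveform(raw, ena_delayed_rise_ns=65, t_end_ns=100)

# Times at which the gated output goes from LOW to HIGH.
rising_edges = [t for (t, v), (_, prev) in zip(gated[1:], gated[:-1])
                if v == 1 and prev == 0]
print(rising_edges)
```

In this idealised model the glitch at 12-18 ns never reaches the output, and the only rising edge is when the delayed ENA arrives, provided that arrival is later than the last transition of the raw signal.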

Thanks
