I have a processor running at a frequency of 48 MHz, so its clock completes 48 million waveforms per second. I do not understand what each waveform "does." Does each waveform have the "capability" of processing 1 bit of data? I am also confused about how a clock signal actually makes information get processed. I may be getting ahead of myself here, but I am very curious. Thanks for any help!

What does each microprocessor clock cycle process?

- Thread starter danielb33

You're off on the wrong track.

In digital electronics we don't think of "waveforms" as such.

A 48 MHz clock is more like a drumbeat or a metronome. It dictates that something is to happen every 1/48 μs, or about every 20.8 ns. This has nothing to do with bits.
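To make the arithmetic concrete, here is a minimal sketch of the period calculation. The variable names are just for illustration.

```python
# Period of one clock tick for a 48 MHz clock.
CLOCK_HZ = 48_000_000

period_s = 1 / CLOCK_HZ      # seconds per tick
period_ns = period_s * 1e9   # same value in nanoseconds

print(f"{period_ns:.2f} ns per clock tick")
```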

In a classic design, we can break this down to four steps of things to do. Thus (for this example only) we will take four drumbeats to perform one instruction.

(If you are a musician, it is like playing four quarter notes in each bar.)

Our four steps could be something such as:

- Fetch an instruction from program memory

- Decode the instruction

- Fetch data from data memory

- Perform an arithmetic operation

So in this hypothetical example, the processor is executing instructions at 48/4 = 12 million instructions every second.
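The four-step example above can be sketched as a tiny simulation. This is only a toy model of the hypothetical machine described here (one step per clock tick, four steps per instruction), not how any particular processor is built. The function name `run` is made up for the sketch.

```python
CLOCK_HZ = 48_000_000
STEPS = ["fetch instruction", "decode", "fetch data", "arithmetic"]

def run(ticks):
    """Advance the toy machine by `ticks` clock ticks.

    Each tick performs exactly one step; an instruction is finished
    once all four steps have been done. Returns instructions completed.
    """
    completed = 0
    step = 0
    for _ in range(ticks):
        step += 1                 # the clock tick triggers the next step
        if step == len(STEPS):    # fourth step done: instruction complete
            completed += 1
            step = 0
    return completed

# One second of ticks, without looping 48 million times:
instructions_per_second = CLOCK_HZ // len(STEPS)

print(run(8))                     # two full instructions in eight ticks
print(instructions_per_second)
```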

In summary, the processor clock is like an orchestral conductor raising the baton up and down, giving each musician a signal as to when to start or stop playing.

That makes more sense, but what is the limit? Your step two, "decode the instruction," could take 3 clock cycles, couldn't it? The processor can only do so much in one cycle, right? I don't understand how a clock cycle can make some execution happen. Maybe it would make more sense if I knew how it is "orchestrated."

Well... it depends. Some processors keep everything simple so each and every instruction takes the same number of clocks to complete. Some don't mind letting some complex instructions take extra clocks to complete.

Some cheat by using two instruction cycles to complete a single instruction.
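As a rough illustration of what that does to timing, here is a sketch that adds up the clocks for a short program in which one instruction takes two instruction cycles. The program, the instruction names, and the cycle counts are all made up for the example. The four clocks per instruction cycle is carried over from the hypothetical machine earlier in the thread.

```python
CLOCK_HZ = 48_000_000
CLOCKS_PER_INSTRUCTION_CYCLE = 4   # assumption: same toy machine as before

# Hypothetical program: (instruction name, instruction cycles it needs)
program = [("add", 1), ("load", 1), ("branch", 2), ("add", 1)]

total_cycles = sum(cycles for _, cycles in program)
total_clocks = total_cycles * CLOCKS_PER_INSTRUCTION_CYCLE
elapsed_ns = total_clocks / CLOCK_HZ * 1e9

print(total_clocks, "clock ticks")
print(f"{elapsed_ns:.1f} ns")
```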

Honestly, don't worry about it for now, unless you want to delve deep into processor architecture. May be best to just accept it for now until you get some more of the basics down and it all clicks into place.

Or become a programmer and never think of it again.

Decoding an instruction is straightforward using combinational circuitry (logic gates). It takes only a portion of a clock cycle to decode the instruction.
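Here is a sketch of what "combinational" means in practice: the outputs depend only on the current input bits, with no stored state and no clock involved. The 2-bit opcode and the four instruction names are invented for the example and do not correspond to any real instruction set.

```python
def decode(opcode):
    """Hypothetical 2-bit opcode decoder written as AND/NOT gate logic.

    Returns one control line per instruction; exactly one line is 1.
    """
    b1 = (opcode >> 1) & 1
    b0 = opcode & 1
    not_b1 = 1 - b1   # NOT gate
    not_b0 = 1 - b0   # NOT gate
    return {
        "add":   not_b1 & not_b0,  # opcode 0b00
        "sub":   not_b1 & b0,      # opcode 0b01
        "load":  b1 & not_b0,      # opcode 0b10
        "store": b1 & b0,          # opcode 0b11
    }

print(decode(0b10))
```

In hardware the gates settle to the right outputs a short time after the opcode bits arrive, which is why decoding can fit inside part of one clock period.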

Modern microprocessors can work on several instructions in the same cycle using a technique called "pipelining": while one instruction is executing, the next one is being decoded and the one after that is being fetched.
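A small sketch can show the overlap. It assumes an idealized four-stage pipeline matching the four steps from earlier in the thread, with no stalls or hazards, which real processors do have to deal with. The function name `pipeline_schedule` is made up for the example.

```python
STAGES = ["fetch", "decode", "read data", "execute"]

def pipeline_schedule(n_instructions):
    """For each clock cycle, list which instruction number sits in each stage.

    Idealized model: every stage takes one cycle and nothing ever stalls.
    An empty stage is shown as None.
    """
    total_cycles = n_instructions + len(STAGES) - 1
    schedule = []
    for cycle in range(total_cycles):
        row = []
        for stage_index in range(len(STAGES)):
            instr = cycle - stage_index   # instruction that entered this many cycles ago
            row.append(instr if 0 <= instr < n_instructions else None)
        schedule.append(row)
    return schedule

for cycle, row in enumerate(pipeline_schedule(5)):
    print(cycle, dict(zip(STAGES, row)))
```

With five instructions this idealized pipeline finishes in 8 cycles, where the one-at-a-time machine would need 5 × 4 = 20. Each instruction still takes four cycles from start to finish, but once the pipeline is full, one instruction completes on every cycle.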

https://wiki.colby.edu/display/~ekirillo/Assignment+9+-+Final+-+PIC16

This might give you a little more insight into what is going on in the CPU.

You May Also Like

-

Siemens Rolls Out Multi-Discipline Simulation Tool for EV Designs

by Jake Hertz

-

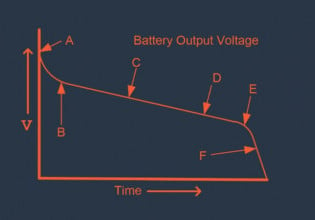

Designing a Battery Pack That’s Right For Your Application

by Jerry Twomey

-

Micron to ‘Revolutionize’ Smart Vehicles With First Quad-Port SSD

by Aaron Carman

-

Microchip’s Portfolio of Integrated Motor Drivers Grows With New Family

by Jake Hertz